Module 01 - Overview of the Generative Application Landscape

Generative AI Landscape

Artificial intelligence (AI) has rapidly moved from the realm of science fiction into our daily lives. A prime example is the rise of AI chatbots such as ChatGPT, which reached 100 million users within a few months of launch. The landline telephone took 75 years to reach the same milestone.

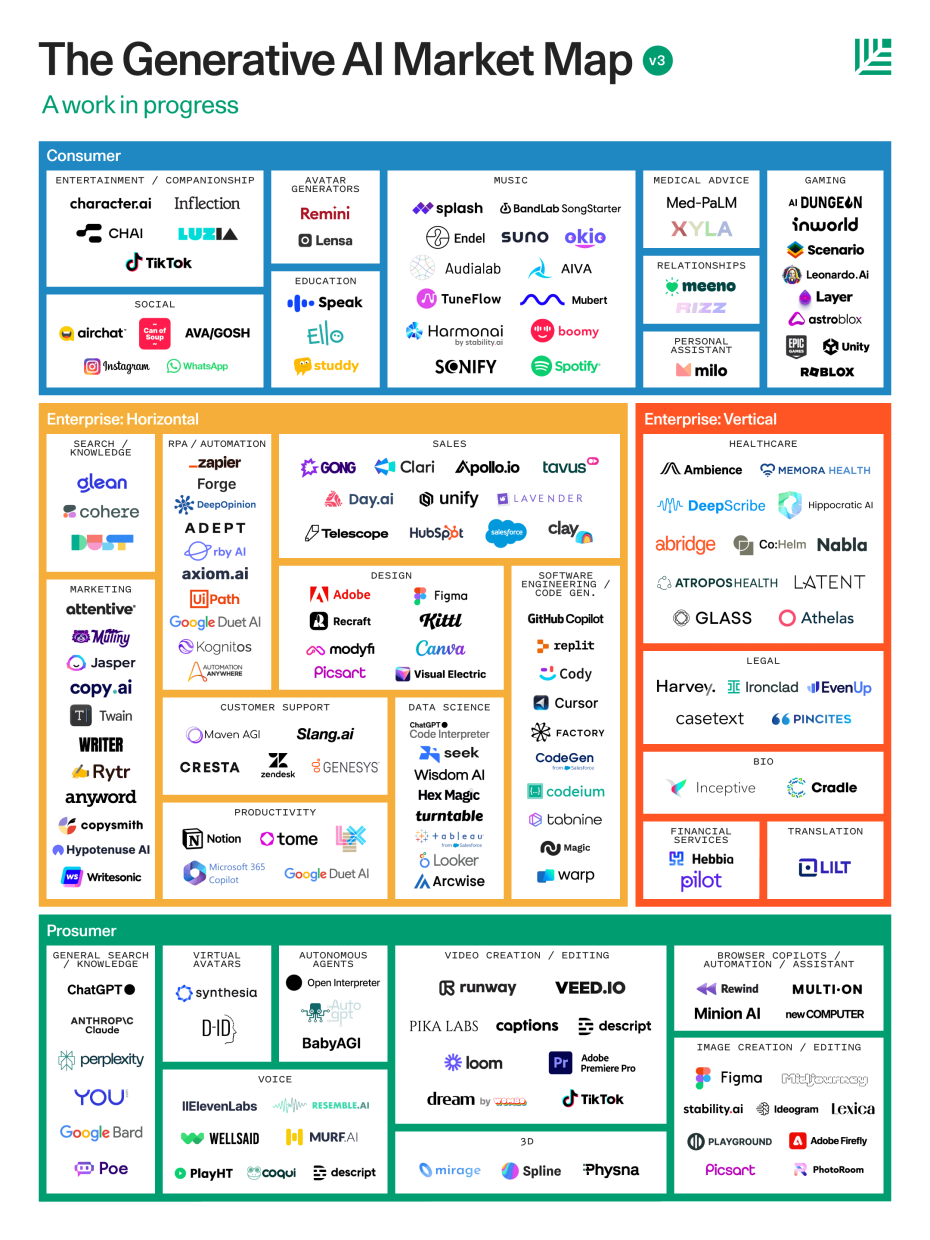

The generative AI landscape has exploded globally over the past couple of years. It is impossible to count exactly how many AI applications are on the market, but some sites estimate that hundreds to thousands of new ones appear every day.

Table of Contents

- Generative AI Landscape

- AI Tools on the Market

- AI Models for Research and Software Development

- Bio-Related LLMs

AI Tools on the Market

According to Tracxn, there are an estimated 18,563 AI startups in the United States.

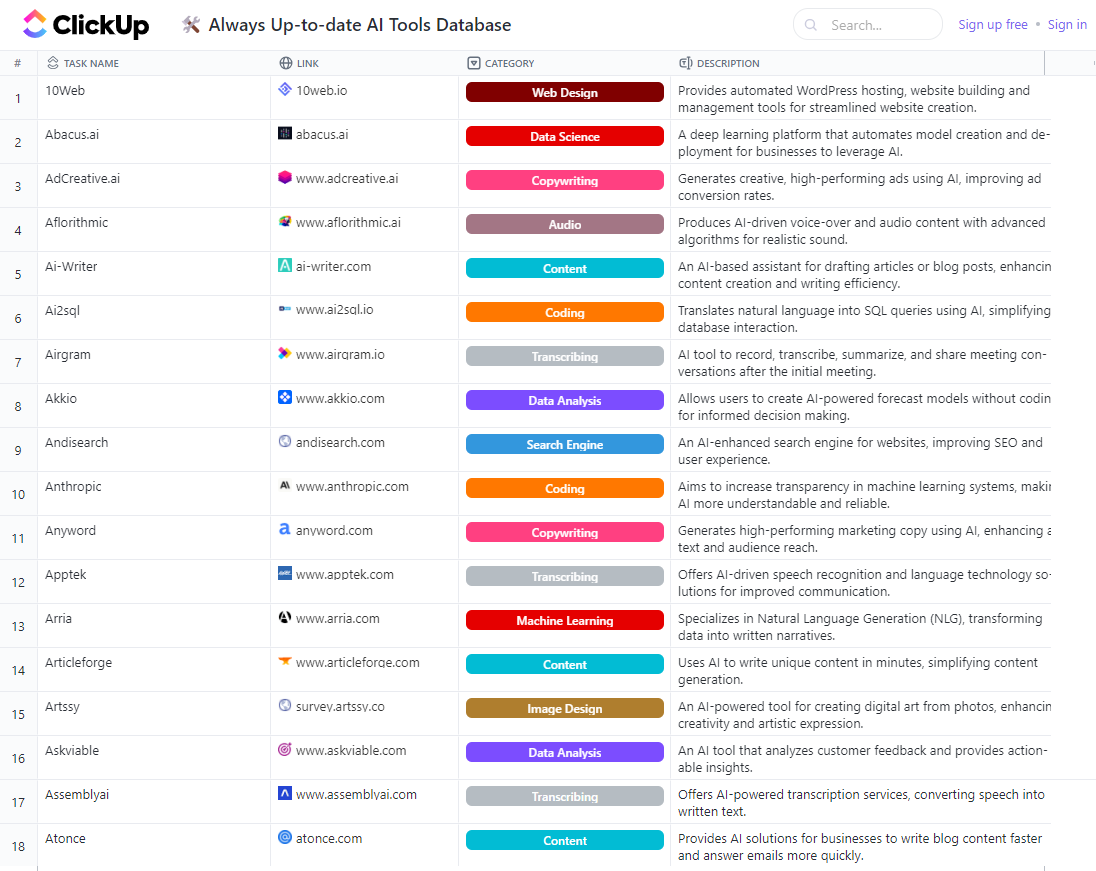

New AI tools appear online every day. Fortunately, several efforts map the AI tools market, such as ClickUp's frequently updated blog and database.

Alt Text: A screen capture of a spreadsheet with hundreds of rows and several columns (task name, link, category, description) (Source: ClickUp)

Alt Text: An organized cheatsheet of the “generative AI market map” with software brands grouped into consumer, enterprise (horizontal), enterprise (vertical), and prosumer categories, covering all aspects of digital life, including social, business, legal, healthcare, general search, and entertainment. (Source: Sonya Huang; Sequoia)

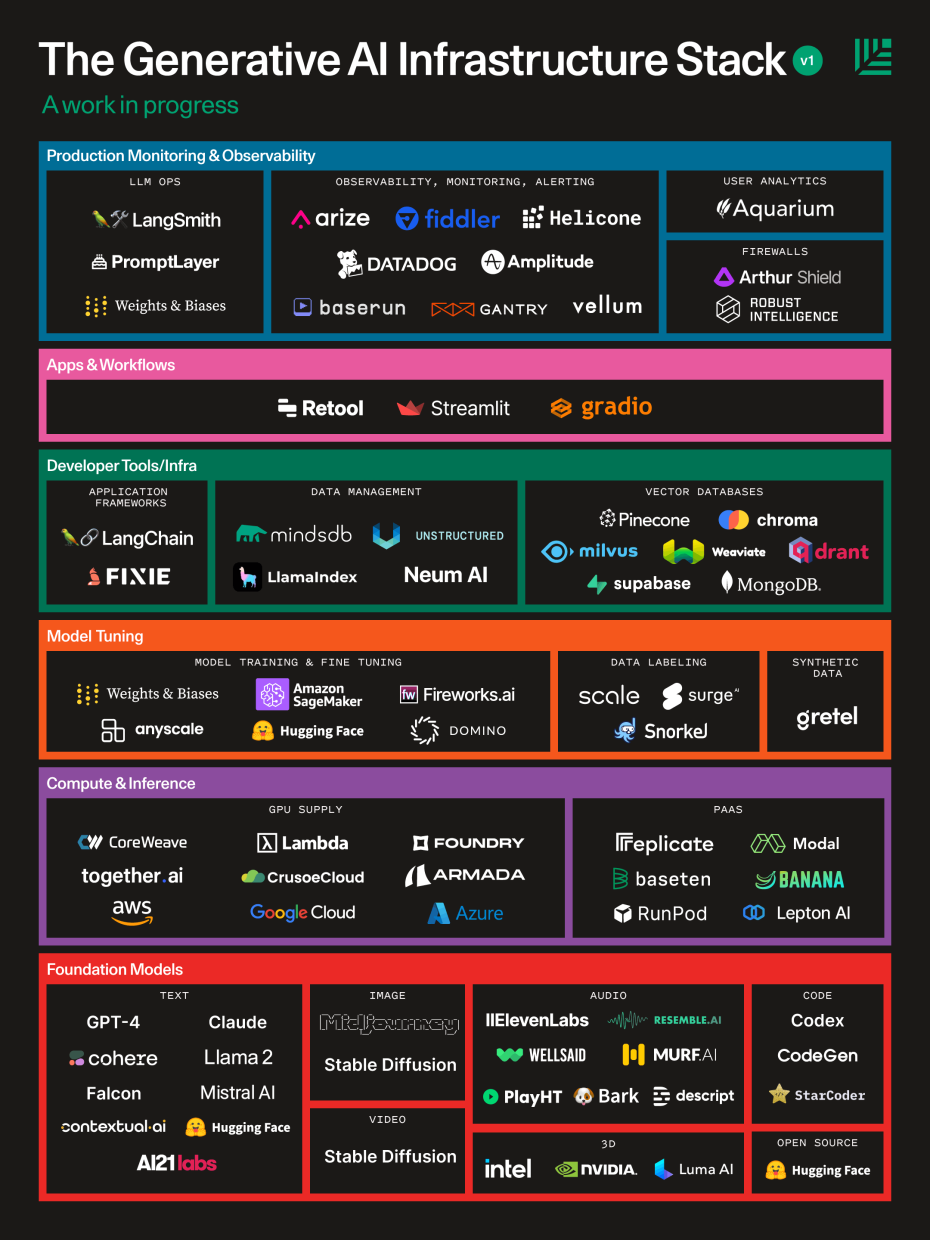

Alt Text: An organized cheatsheet of the “generative AI infrastructure developer stack” with software brands for the following categories: production monitoring & observability, apps & workflows, developer tools, model tuning, compute & inference, and foundation models. (Source: Sonya Huang; Sequoia)

AI Models for Research and Software Development

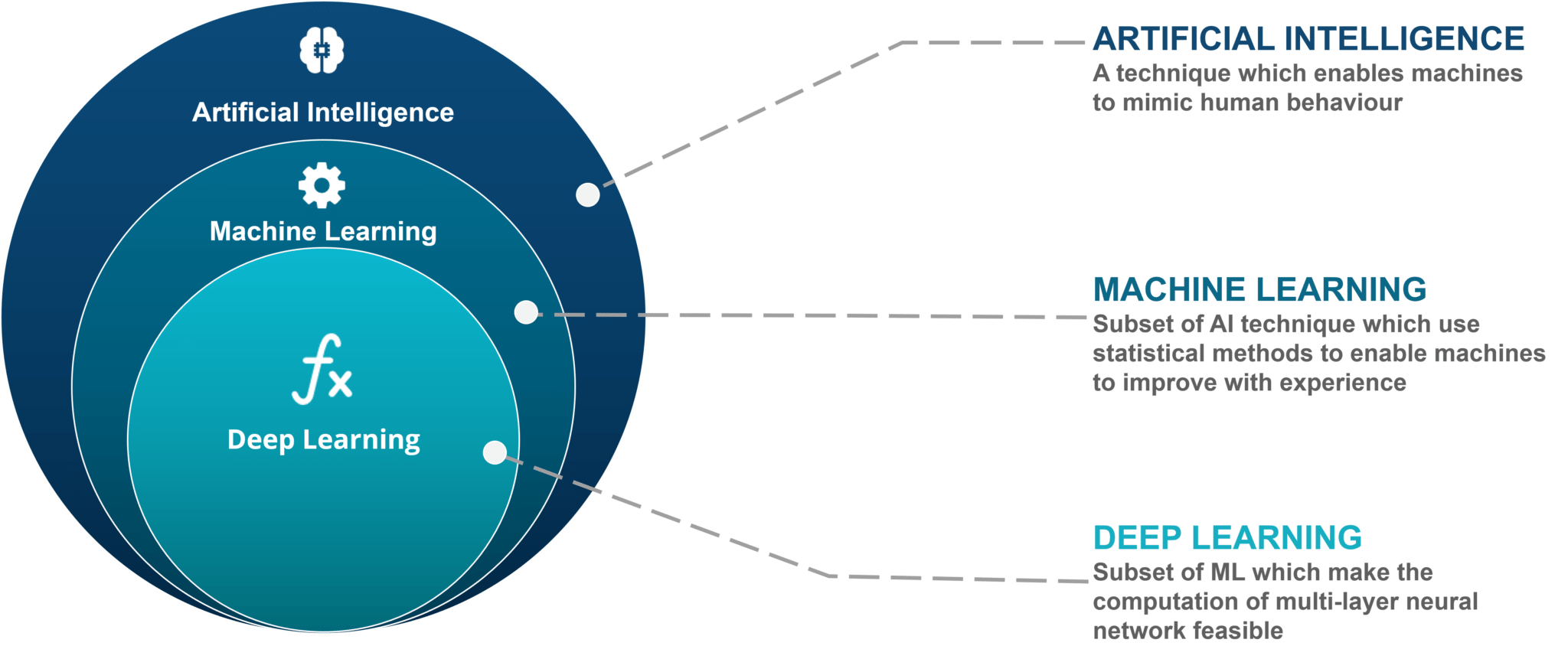

The terms artificial intelligence (AI), machine learning (ML), and deep learning (DL) are sometimes used interchangeably, but they are distinct. In brief:

- AI encompasses ML and DL

- ML is a subset of AI

- DL is a further subset of ML

Alt Text: Image showing three nested circles, with the deep learning circle inside the machine learning circle, which in turn sits inside the largest circle, artificial intelligence. (Source: Edureka.com)

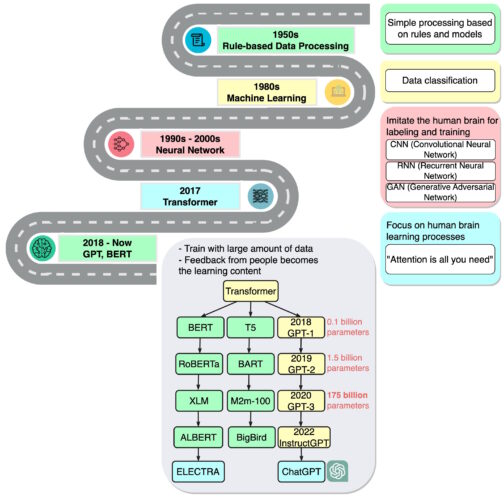

Current trends in AI research and applications build on decades of prior work. It would be impossible to adequately cover the many types of supervised, unsupervised, and semi-supervised learning models here. Below is a roadmap of the history of ML that led to today’s GPT and BERT applications.

Alt Text: A graphic of a road that starts in the 1950s with rule-based data processing, which relies on simple rules and models; curves to 1980s machine learning based on data classification; continues to 1990s to 2000s neural networks that imitate the human brain for labeling and training, such as CNNs, RNNs, and GANs; then reaches the 2017 transformer models, which focus on human brain learning processes; and finally arrives at 2018 to the present with GPT and BERT, which were trained on massive data and where feedback from people becomes learning content. (Source: NVIDIA Blog/blog.bytebytego.com)

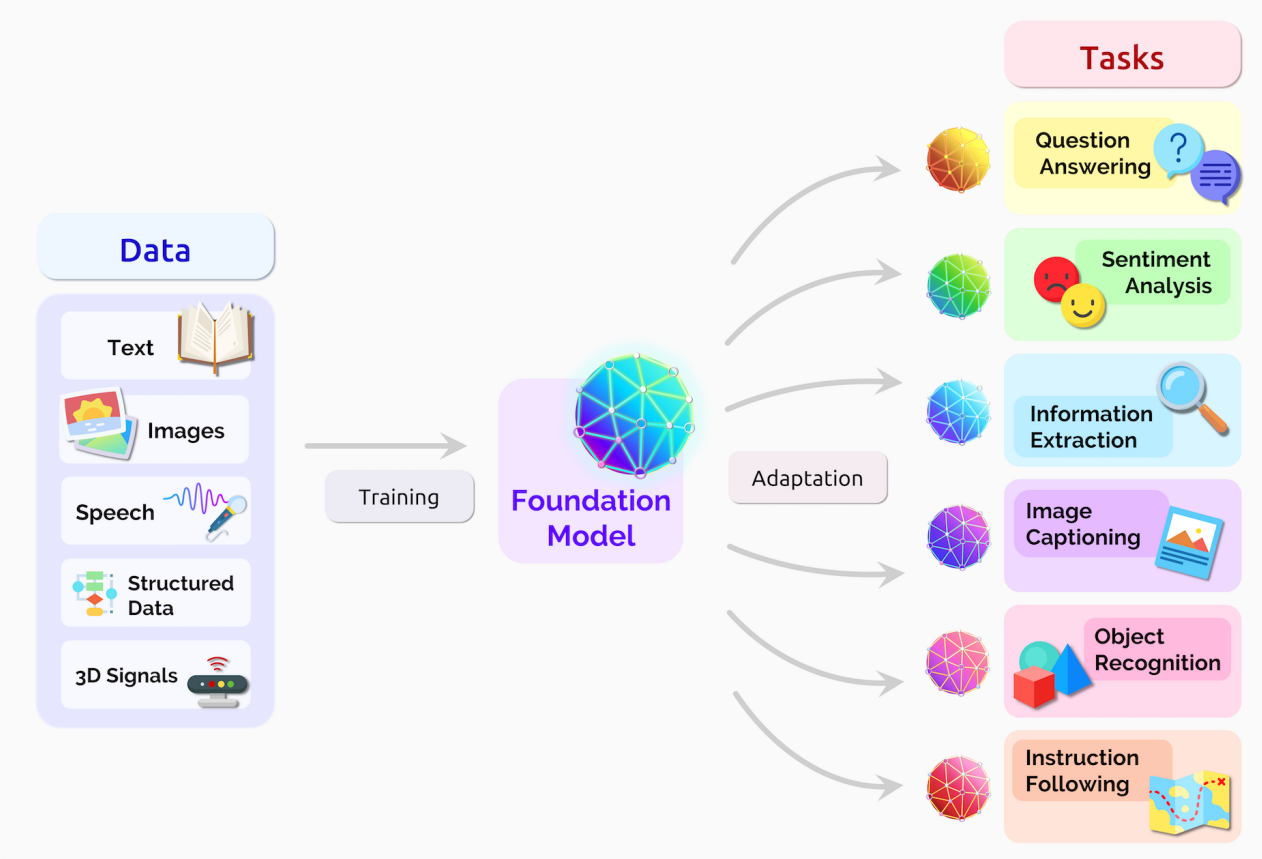

A blog post from NVIDIA describes the foundation model as a “paradigm for building AI systems,” since these neural networks are trained on massive unlabeled datasets and can be applied to a wide variety of tasks. The figure below, from a 214-page collaborative paper from Stanford, illustrates this well:

Alt Text: A flowchart from left to right, which starts with a column for “data” (text, images, speech, structured data, 3D signals) with an arrow labeled “training” pointing to a “foundation model” graphic in the middle, and then an arrow labeled with “adaptation” pointing to multiple tasks in the far-right “tasks” column containing a list (question answering, sentiment analysis, information extraction, image captioning, object recognition, and instruction following) (Source: 2021 arXiv)

A year after the term “foundation models” was coined, the term “generative AI” emerged as a broad umbrella covering transformers, large language models, diffusion models, and other neural networks that can create a wide range of content, including text, images, video, code, and even music.

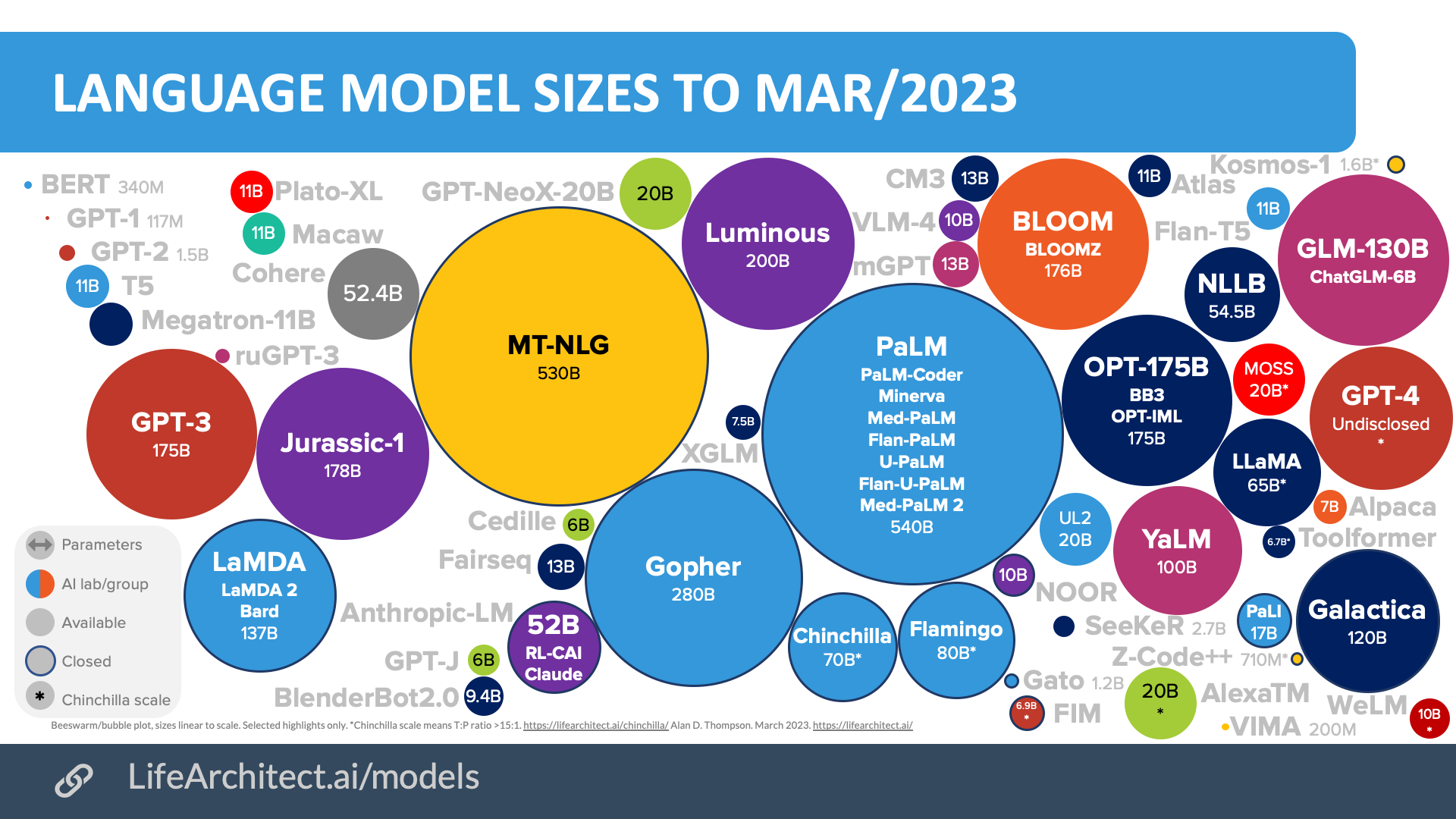

Alt Text: (Source: lifearchitect.ai)

We will not elaborate on the current state of large language models (LLMs) here, but the lifearchitect.ai site provides a fairly comprehensive summary for those who want to learn more. Topics covered include:

- Language model sizes & predictions

- Emergent abilities of LLMs

- Several industry models from Google, Meta, OpenAI, Baidu, etc.

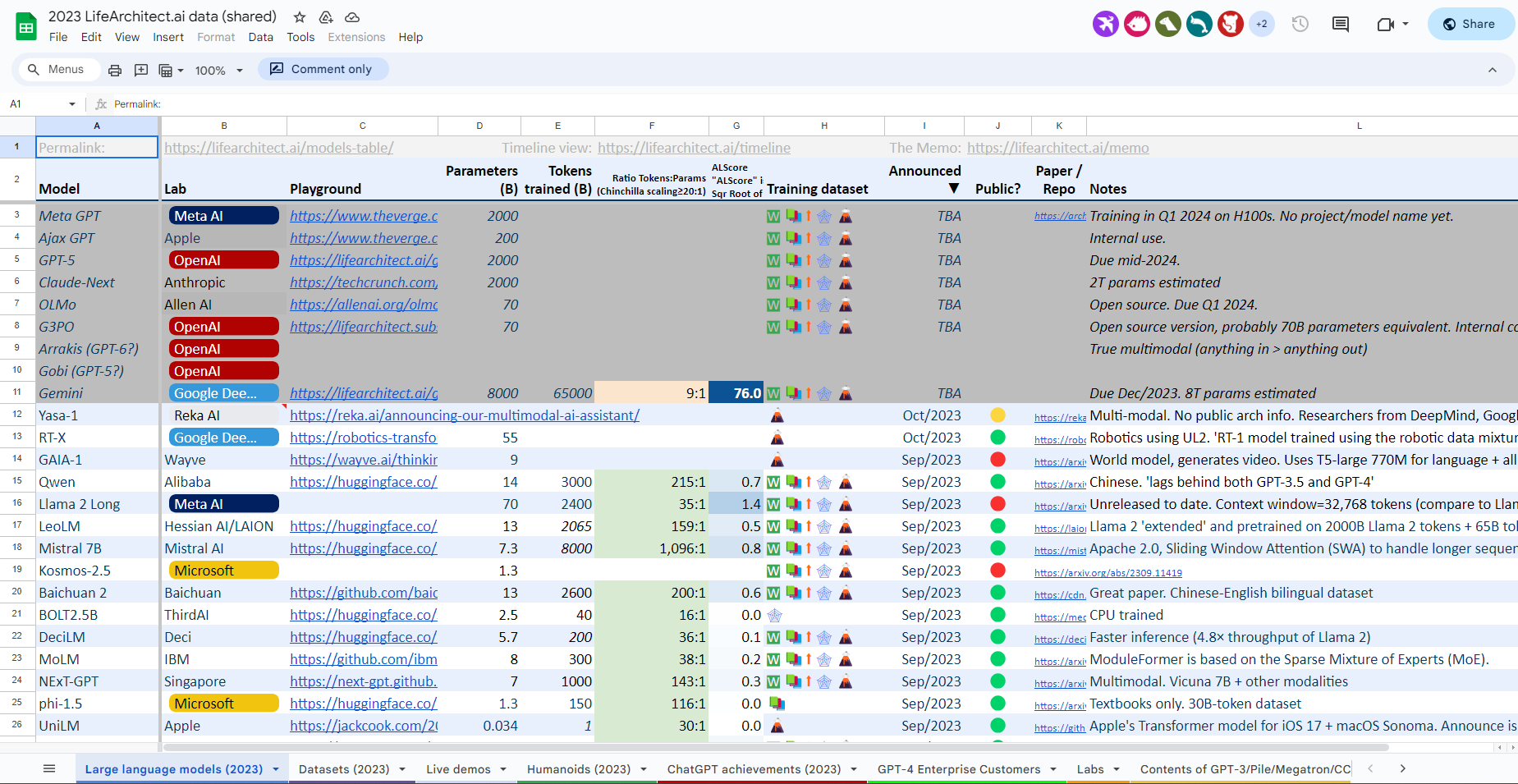

The site also includes a regularly updated Google Sheet summarizing details about current large language models.

Alt Text: (Source: lifearchitect.ai)

Bio-Related LLMs

Several collections on Hugging Face bring together biomedical, biochemical, biological, and clinical models that may be of interest to readers.